Multimodal Support in Chrome's Built-in AI

I'll also note my use `await createImageBitmap(file);` isn't necessary as I can pass the file object directly to it. I've got Thomas Steiner of the Chrome team to thank for pointing that out.

It's been a few weeks since I blogged about Chrome's built-in AI efforts, but with Google IO going this week there's been a lot of announcements and updates. You can find a great writeup of recent changes on the Chrome blog: "AI APIs are in stable and origin trials, with new Early Preview Program APIs".

One feature that I've been excited the most about has finally been made available, multimodal prompting. This lets you use both image and audio data for prompts. Now, remember, this is all still early preview and will likely change before release, but it's pretty promising.

As I've mentioned before, the Chrome team is asking folks to join the EPP (early preview program) for access to the docs and such, but it's fine to publicly share demos. You'll want to join the EPP for details on how to enable these APIs and use the latest Chrome Canary, but let me give you some examples of what you can do.

Basic Image Identification

At the simplest level, to enable multimodal inputs you simply tell the model you wish to deal with them:

session = await LanguageModel.create({

expectedInputs:[{type: 'image'}]

});

You can also use audio as an expected input but I'm only concerned with images for now. To test, I built a demo that simply let me select a picture (or use my camera on mobile), render a priview of the image, and then let you analyze it.

HTML wise it is just a few DOM elements:

<h2>Image Analyze</h2>

<div class="twocol">

<div>

<p>

<input type="file" capture="camera" accept="image/*" id="imgFile">

<button id="analyze">Analyze</button>

</p>

<img id="imgPreview">

</div>

<div>

<p id="result"></p>

</div>

</div>

The JavaScript is the important bit. So first off, when the file input changes, I kick off a preview process:

$imgFile = document.querySelector('#imgFile');

$imgPreview = document.querySelector('#imgPreview');

$imgFile.addEventListener('change', doPreview, false);

// later...

async function doPreview() {

$imgPreview.src = null;

if(!$imgFile.files[0]) return;

let file = $imgFile.files[0];

$imgPreview.src = null;

let reader = new FileReader();

reader.onload = e => $imgPreview.src = e.target.result;

reader.readAsDataURL(file);

}

This is fairly standard, but let me know if it doesn't make sense. In theory I could have done the AI analysis immediately, but instead I tied it to the analyze button I showed up on top. Here's that process:

async function analyze() {

$result.innerHTML = '';

if(!$imgFile.value) return;

console.log(`Going to analyze ${$imgFile.value}`);

let file = $imgFile.files[0];

let imageBitmap = await createImageBitmap(file);

let result = await session.prompt([

'Create a summary description of the image.',

{ type: "image", content: imageBitmap}

]);

console.log(result);

$result.innerHTML = result;

}

So, remember that $imgFile is a pointer to the input field which is using the file type. I've got read access to the selected file, which is turned into an image bitmap (using window.createImageBitmap), and then passed to the AI model. My prompt is incredibly simple - just write a summary.

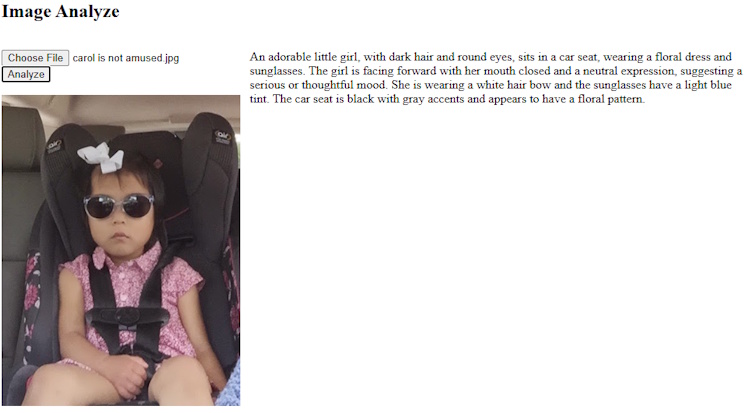

As I assume most of yall can't actually run this demo, let me share with you a few screenshots showing some selected pictures and their summaries.

Yes, I agree, she is adorable.

This one was pretty shocking in terms of the level of detail. I'm not sure if that is LAX, and not sure if those are exact matches on the airplane types, but damn is that impressive.

This is pretty good as well, although I'm surprised it didn't recognize that it was a particular Mandalorian, Boba Fett.

Here's the complete CodePen if you want to try it out, again, given that you've gone through the prerequisites.

See the Pen MM + AI by Raymond Camden (@cfjedimaster) on CodePen.

More Guided Indentification

Of course, you can do more than just summarize an image, you can also guide the summarization, for example:

let result = await session.prompt([

'You report if an image is a cat or not. If it is a cat, you should return a wonderfully pleasant and positive summary of the picture. If it is not a cat, your response should be very negative and critical.',

{ type: "image", content: imageBitmap}

]);

While a bit silly, there's some practical uses for this. You could imagine a content site dedicated to cats (that's all I dream of) where you want to do a bit of sanity checking for content editors to ensure pictures are focused on cats, not other subjects.

Here's two examples, first a cat:

And then, obviously, not a cat:

For completeness sake, here's this demo:

See the Pen MM + AI (Cat or Not) by Raymond Camden (@cfjedimaster) on CodePen.

Image Tagging

Ok, this next demo I'm really excited about. A few weeks ago, the Chrome team added structured output to the API. This allows you to guide the AI in regards to how responses should be returned. Imagine if in our previous demo we simply wanted the AI to return true or false if the image was of a cat. While we could use our prompt for that, and be really clear, there's still a chance the AI may feel creative and go a bit beyong the guardrails of your prompt. Structured output helps correct that.

So with that in mind, imagine if we asked the AI to not describe the image, but rather provide a list of tags that represent what's in the image.

First, I'll define a basic schema:

const schema = {

type:"object",

required: ["tags"],

additionalProperties: false,

properties: {

tags: {

description:"Items found in the image",

type:"array",

items: {

type:"string"

}

}

}

};

And then I'll pass this schema to the prompt:

let result = await session.prompt([

"Identify objects found in the image and return an array of tags.",

{ type:"image", content: imageBitmap }

],{ responseConstraint: schema });

Note that the prompt API is somewhat complex in how you can pass arguments to it, and figuring out the right way to pass the image and the schema took me a few tries. The documentation around this is going to update soon.

The net result from this is a JSON string, so to turn it into an array, I can do:

result = JSON.parse(result);

In my demo, I just print it out, but you can easily doing things like:

- For my cat site, if I don't see "cat", "cats", or "kitten", "kittens", raise a warning to the user.

- For my cat site, if I see "dog" or "dogs", raise a warning.

To be clear, this, and all of the Chrome AI features, should focus on helping the user, and shouldn't be used to prevent any action or as a security method of some sort, but having this here and available can help the process in general, and that's a good thing.

Here's two examples with the output:

And here's the complete demo:

See the Pen MM + AI (Tags) by Raymond Camden (@cfjedimaster) on CodePen.

More Resources

Before I wrap up, a few resources to share, thanks to Thomas Steiner (who also helped me a bit with my code, thanks Thomas!), from Google IO:

- Practical built-in AI with Gemini Nano in Chrome

- The future of Chrome Extensions with Gemini in your browser

Photo by Andriyko Podilnyk on Unsplash