First off - I apologize for the somewhat lame title. It occurred to me today that it's been a while since I played with new and upcoming web standards, and as I recently discovered Chrome was introducing some really cool stuff around camera support, I thought it would be fun to explore a bit. Specifically I'm talking about the "Image Capture" API. This API provides access to the camera and supports taking pictures (of course) as well as returning information about the camera hardware itself. Looking into this API led me to another new API, MediaDevices, which replaces the older getUserMedia that you may have seen in the past.

I've blogged about this topic in the past. Back in 2013, I wrote "Capturing camera/picture data without PhoneGap". In that post I made use of the capture attribute of the input tag to let users select from their device camera. That post got so much traffic, I revisited it last year: "Capturing camera/picture data without PhoneGap - An Update". In that post, I looked more at the capture attribute and the various ways of using it.

With the Image Capture API, we've got something we can use directly in JavaScript without an input tag. In order to use it, you need to either enable an "origin trial" in Chrome 56, which will be out very soon, or use Canary and enable the "Experimental Web Platform" flag. I tested with Canary - which as a reminder is also available on Android devices. You can't use this at all on iOS.
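Since support is this spotty, it may be worth checking for the APIs before touching them. Here is a minimal sketch of that check. The function name `detectCameraSupport` is my own invention, and it takes a window-like object as an argument only so it can be tried outside a browser:

```javascript
// Hypothetical helper: reports which of the APIs used in this post are
// present on a window-like object. In a page you would pass `window`.
function detectCameraSupport(win) {
  const nav = (win && win.navigator) || {};
  const mediaDevices = nav.mediaDevices || {};
  return {
    getUserMedia: typeof mediaDevices.getUserMedia === 'function',
    enumerateDevices: typeof mediaDevices.enumerateDevices === 'function',
    imageCapture: Boolean(win) && typeof win.ImageCapture === 'function'
  };
}

// Possible use in a page:
// const support = detectCameraSupport(window);
// if (!support.imageCapture) { /* fall back to the input tag approach */ }
```

If `imageCapture` comes back false, the input tag with the capture attribute from my older posts is still a reasonable fallback.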

Here is a simple example, taken from the excellent blog post by Google.

First - request access to the camera. This gives a visible prompt to the user:

navigator.mediaDevices.getUserMedia({video: true})

.then(gotMedia)

.catch(error => console.error('getUserMedia() error:', error));

First and foremost - notice it is promise based. Woot. That argument to getUserMedia is how we tell the browser what we want to access. This will also impact the warning the end user gets. As an aside, you can go batshit crazy in that argument. While I was digging around the MDN docs for getUserMedia, I found out you have options for the resolution you want, and by options, I mean min, max, desired (ideal) and required (exact). That's freaking cool. I can imagine for someone taking pictures for legal, or maybe scientific purposes, you may want to demand a certain level of fidelity before working with the camera. This is also where you can ask for the front or rear facing camera, and again, what's cool is that your code can say you want the rear camera or you require it. It's a bit off topic for this post, but later on click that link and see how precise you can get.
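To make that a bit more concrete, here is a rough sketch of building such an argument. The `min`, `max`, `ideal`, `exact`, `width`, and `facingMode` keys come from the getUserMedia constraints syntax. The helper `buildVideoConstraints` and its option names are hypothetical and exist just for illustration:

```javascript
// Hypothetical helper: builds a getUserMedia constraints object.
// `facing` is 'environment' (rear camera) or 'user' (front camera).
// With requireFacing the camera is demanded (exact), otherwise it is
// only preferred (ideal).
function buildVideoConstraints(options = {}) {
  const {
    facing = 'environment',
    requireFacing = false,
    minWidth,
    idealWidth,
    maxWidth
  } = options;

  const video = {
    facingMode: requireFacing ? { exact: facing } : { ideal: facing }
  };

  // Only include the width keys that were actually supplied.
  const width = {};
  if (minWidth !== undefined) width.min = minWidth;
  if (idealWidth !== undefined) width.ideal = idealWidth;
  if (maxWidth !== undefined) width.max = maxWidth;
  if (Object.keys(width).length > 0) video.width = width;

  return { video };
}

// Possible use in a page:
// navigator.mediaDevices.getUserMedia(
//   buildVideoConstraints({ requireFacing: true, minWidth: 1280 })
// ).then(gotMedia);
```

Keep in mind that a required constraint that can't be met makes the request fail, so I'd only demand things the page really can't work without.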

Anyway, once you have access to the device camera, taking a picture is trivial:

const $img = document.querySelector('#testResult');

const mediaStreamTrack = mediaStream.getVideoTracks()[0];

const imageCapture = new ImageCapture(mediaStreamTrack);

imageCapture.takePhoto()

.then(res => {

$img.src = URL.createObjectURL(res);

});

In the sample above, $img is an img tag in the DOM. I simply ask for the picture and then display it.
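One thing the sample glosses over: each call to URL.createObjectURL keeps the photo's Blob around until the URL is revoked, which could add up if the user takes a lot of pictures. Here is a small sketch of one way to handle that. The helper name `showPhoto` is hypothetical, and the `urlApi` parameter is there only so the function can be tried without a browser:

```javascript
// Hypothetical helper: shows a photo Blob in an img element and releases
// the object URL of the photo it replaces. `urlApi` defaults to the
// global URL object.
function showPhoto(img, blob, urlApi = URL) {
  const previous = img.src;
  img.src = urlApi.createObjectURL(blob);
  // Only revoke URLs that were created from a Blob earlier.
  if (typeof previous === 'string' && previous.startsWith('blob:')) {
    urlApi.revokeObjectURL(previous);
  }
  return img.src;
}

// Possible use with the sample above:
// imageCapture.takePhoto().then(blob => showPhoto($img, blob));
```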

The API also supports grabbing a frame (called, funny enough, grabFrame) from a video stream, which returns a slightly different, lower resolution form of the image (an ImageBitmap rather than a Blob).

Lastly, and this was the really fascinating part, you can ask for the camera settings (getPhotoCapabilities) and set options (like zoom) as well (setOptions).

So with that in mind, I built a simple demo that would demonstrate capturing an image as well as getting the capabilities of the camera. To make it even more interesting, I added in enumerateDevices, part of the MediaDevices API, which does pretty much what you think it does. Let's look at the code, front end first even though it's not doing much.

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<title></title>

<meta name="description" content="">

<meta name="viewport" content="width=device-width">

</head>

<body>

<button id="takePictureButton">Take a Picture</button><br/>

<img id="testResult" style="max-width: 400px;max-height:400px">

<div id="capaDiv"></div>

<div id="devDiv"></div>

<script src="app1.js"></script>

</body>

</html>

Nothing much here except for a button and a few empty DOM items I'll fill up later. Now for the code - and to be clear, a lot of code here is taken/modified from the Google/MDN demos.

let $button, $img, $capa, $dev;

document.addEventListener('DOMContentLoaded', init, false);

function init() {

$button = document.querySelector('#takePictureButton');

$img = document.querySelector('#testResult');

$capa = document.querySelector('#capaDiv');

$dev = document.querySelector('#devDiv');

console.log('Ok, time to test...');

navigator.mediaDevices.getUserMedia({video: true})

.then(gotMedia)

.catch(error => console.error('getUserMedia() error:', error));

}

function gotMedia(mediaStream) {

const mediaStreamTrack = mediaStream.getVideoTracks()[0];

const imageCapture = new ImageCapture(mediaStreamTrack);

//sniff details

imageCapture.getPhotoCapabilities().then(res => {

console.log(res);

/*

hard coded dump of capabilities. i know for most

items there are keys under them, but nothing more complex

*/

let s = '<h2>Capabilities</h2>';

for(let key in res) {

s += '<h3>'+key+'</h3>';

if(typeof res[key] === "string") {

s += '<p>'+res[key]+'</p>';

} else {

s += '<ul>';

for(let capa in res[key]) {

s += '<li>'+capa+'='+res[key][capa]+'</li>';

}

s += '</ul>';

}

s += '';

}

$capa.innerHTML = s;

});

//sniff devices

navigator.mediaDevices.enumerateDevices().then((devices) => {

console.log(devices);

let s = '<h2>Devices</h2>';

// https://developer.mozilla.org/en-US/docs/Web/API/MediaDevices/enumerateDevices

devices.forEach(device => {

s += device.kind +': ' + device.label + ' id='+device.deviceId + '<br/>';

});

$dev.innerHTML = s;

});

$button.addEventListener('click', e => {

console.log('lets take a pic');

imageCapture.takePhoto()

.then(res => {

$img.src = URL.createObjectURL(res);

});

}, false);

}

From the top - I grab some DOM items to reuse later, then try to fire off a request to get a device. On my desktop, where I don't have a webcam yet, this throws an error. (Although I do have a mic, and if I asked for audio support instead, it should work just fine.) Once we get access to a camera device, I ask for the photo capabilities and print them to the DOM. I do the same for all the media devices, and finally, you can see a simple click handler to support taking pictures.
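My catch handler just logs the error, but the error's name can tell you why the request failed. Here is a hedged sketch of turning that into something friendlier. The error names below are the ones defined for getUserMedia in the current spec. Older or experimental builds may report different names, which is why there is a fallback. The function name and the message text are made up:

```javascript
// Hypothetical helper: maps a getUserMedia rejection to a readable message.
function describeCameraError(error) {
  const name = (error && error.name) || 'UnknownError';
  const messages = {
    NotFoundError: 'No camera was found on this device.',
    NotAllowedError: 'Permission to use the camera was denied.',
    NotReadableError: 'The camera is in use or could not be started.',
    OverconstrainedError: 'No camera satisfies the requested constraints.'
  };
  // Fall back to a generic message for names not listed above.
  return Object.prototype.hasOwnProperty.call(messages, name)
    ? messages[name]
    : 'Could not access the camera (' + name + ').';
}

// Possible use in the demo:
// .catch(error => { $capa.textContent = describeCameraError(error); });
```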

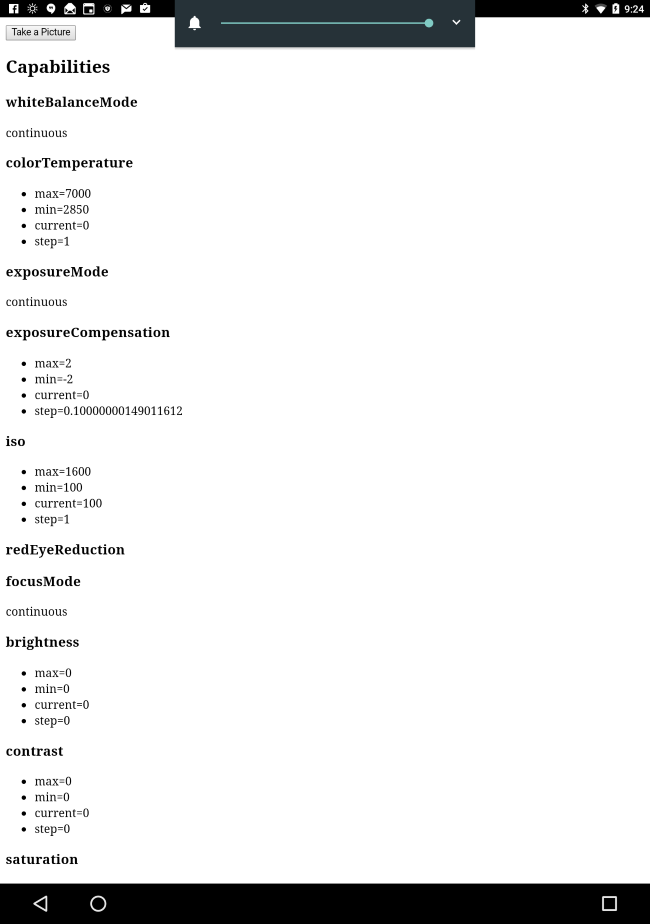

The level of detail on the photo capabilities is pretty interesting. You get results for:

- White Balance Mode

- Color Temperature

- Exposure Mode

- ISO

- Red Eye Reduction

- Focus Mode

- Brightness

- Contrast

- Saturation

- Sharpness

- Image Height and Image width

- Zoom

- Fill Light Mode

For most of the above, you get min, max, current, and step values. Unfortunately, desktop Canary (on Mac) reported everything at zero, but Android's Canary reported good values. Here is a screen shot from my Pixel C:

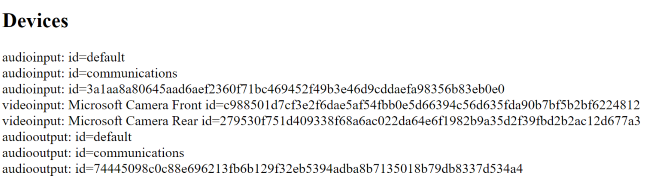
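Those min, max, and step values are handy if you want to change a setting like zoom, since a requested value probably should land inside the reported range. Here is a minimal sketch of that idea. The helper `clampToRange` is hypothetical, and it assumes a range object with `min`, `max`, and `step` keys like the ones in the dump above. It doesn't apply the value to the camera, it only computes it:

```javascript
// Hypothetical helper: clamps a requested value into a reported range and
// snaps it to the nearest step.
function clampToRange(requested, range) {
  const { min, max, step } = range;
  // An empty range (for example the all-zero values I saw on desktop)
  // leaves nothing to choose from.
  if (!(max > min)) return min;
  const clamped = Math.min(max, Math.max(min, requested));
  if (!(step > 0)) return clamped;
  const steps = Math.round((clamped - min) / step);
  return Math.min(max, min + steps * step);
}

// Example with made-up numbers: a zoom range of 1 to 4 in steps of 0.5.
// clampToRange(2.3, { min: 1, max: 4, step: 0.5 }) gives 2.5
```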

Here is the output from device enumeration on my Surface Book:

Ok, let's look at a demo!

Demo 1

Back in 2013 when I wrote that first post, my demo code made use of a cool library called Color Thief. Color Thief looks at an image and can return prominent colors. So my first demo simply recreates that. First, the front end:

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<title></title>

<meta name="description" content="">

<meta name="viewport" content="width=device-width">

<style>

#testImage {

width:100%;

}

#swatches {

width: 100%;

height: 50px;

}

.swatch {

width:18%;

height: 50px;

border-style:solid;

border-width:thin;

float: left;

margin-right: 3px;

}

</style>

</head>

<body>

<button id="takePictureButton">Take a Picture</button><br/>

<img id="testImage">

<div id="swatches">

<div id="swatch0" class="swatch"></div>

<div id="swatch1" class="swatch"></div>

<div id="swatch2" class="swatch"></div>

<div id="swatch3" class="swatch"></div>

<div id="swatch4" class="swatch"></div>

</div>

<script src="color-thief.min.js"></script>

<script src="app2.js"></script>

</body>

</html>

Not much here - just the UI for taking a picture and the result, empty DOM items to be filled later. Now let's look at the code. (And let me be clear, I'm not doing good error handling here.)

let $button, $img, imageCapture;

document.addEventListener('DOMContentLoaded', init, false);

function init() {

$button = document.querySelector('#takePictureButton');

$img = document.querySelector('#testImage');

navigator.mediaDevices.getUserMedia({video: true})

.then(setup)

.catch(error => console.error('getUserMedia() error:', error));

}

function setup(mediaStream) {

$button.addEventListener('click', takePic);

$img.addEventListener('load', getSwatches);

const mediaStreamTrack = mediaStream.getVideoTracks()[0];

imageCapture = new ImageCapture(mediaStreamTrack);

}

function takePic() {

imageCapture.takePhoto()

.then(res => {

$img.src = URL.createObjectURL(res);

});

}

function getSwatches() {

let colorThief = new ColorThief();

var colorArr = colorThief.getPalette($img, 5);

console.dir(colorArr);

for (var i = 0; i < Math.min(5, colorArr.length); i++) {

document.querySelector('#swatch'+i).style.backgroundColor = "rgb("+colorArr[i][0]+","+colorArr[i][1]+","+colorArr[i][2]+")";

}

}

Essentially this is the same as the previous demo, in terms of getting access and working with takePhoto. The rest of the code is exactly the same as the demo from three years ago. When I detect a load event in the image, I run Color Thief and get the swatches.
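The swatch loop builds its CSS color strings by hand, which is a little noisy. As a small sketch, that piece could be pulled out like this. The code above shows getPalette returning an array of [r, g, b] arrays. The helper names `toCssRgb` and `swatchColors` are my own:

```javascript
// Hypothetical helper: turns one [r, g, b] array into a CSS color string.
function toCssRgb(color) {
  const [r, g, b] = color;
  return `rgb(${r}, ${g}, ${b})`;
}

// Hypothetical helper: converts at most `count` palette entries.
function swatchColors(palette, count = 5) {
  return palette.slice(0, count).map(toCssRgb);
}

// Possible use in getSwatches():
// swatchColors(colorArr).forEach((css, i) => {
//   document.querySelector('#swatch' + i).style.backgroundColor = css;
// });
```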

Here's a screen shot of it in action - yes - that's me.

And for comparison, here is myself with Mysterio. (Damnit Marvel, when will we see Mysterio on the big screen??)

Cool - but can we kick it up a notch?

Demo 2

After getting this to work, I thought, why not kick it up a notch? I mentioned earlier that you could grab frames from a video source. What if we could convert the static demo into a live video feed where color swatches were updated in real time? That would be seriously cool, and enterprise for sure, definitely enterprise.

So this turned out to be a bit trickier. In order to work with the images from the video stream, you have to copy them to a canvas, 'paint' it out, then use that to feed Color Thief. My solution uses an image that is hidden from view (via CSS) and a canvas element made on the fly. (I could have probably done the image that way too.) I modified the front end like so:

<img id="testImage">

<video id="testVideo" autoplay></video>

As I said, that image is 'hidden' via CSS:

#testImage {

display:none;

}

And now the code:

let $button, $img, imageCapture, mediaStreamTrack, $video;

document.addEventListener('DOMContentLoaded', init, false);

function init() {

$button = document.querySelector('#startVideoButton');

$img = document.querySelector('#testImage');

$video = document.querySelector('#testVideo');

navigator.mediaDevices.getUserMedia({video: true})

.then(setup)

.catch(error => console.error('getUserMedia() error:', error));

}

function setup(mediaStream) {

$video.srcObject = mediaStream;

$img.addEventListener('load', getSwatches);

mediaStreamTrack = mediaStream.getVideoTracks()[0];

imageCapture = new ImageCapture(mediaStreamTrack);

setInterval(getFrame,300);

}

function getFrame() {

console.log('running interval');

imageCapture.grabFrame()

.then(imageBitmap => {

// grabFrame resolves with an ImageBitmap, not a Blob

let $canvas = document.createElement('canvas');

$canvas.width = imageBitmap.width;

$canvas.height = imageBitmap.height;

$canvas.getContext('2d').drawImage(imageBitmap, 0,0, imageBitmap.width, imageBitmap.height);

$img.src = $canvas.toDataURL('image/png');

});

}

function getSwatches() {

let colorThief = new ColorThief();

var colorArr = colorThief.getPalette($img, 5);

for (var i = 0; i < Math.min(5, colorArr.length); i++) {

document.querySelector('#swatch'+i).style.backgroundColor = "rgb("+colorArr[i][0]+","+colorArr[i][1]+","+colorArr[i][2]+")";

}

}

Basically - I set the video source to the media stream I requested earlier. I got that code from this demo at Google.

I then use grabFrame and pass the result to the canvas tag I'm creating on the fly. I then update the image source from the canvas and the same code as before handles the load. I'm doing this every 300 ms. I tried 200 ms and began getting errors in console so I figured 300 was a bit safer.

To be clear, there is probably a better way of doing this.
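One possibility, and this is only a sketch I haven't run against the demo, is to skip a tick whenever the previous grab is still pending, so calls never pile up no matter what interval you pick. The wrapper `makeNonOverlapping` is hypothetical, and it assumes getFrame is changed to return the promise from grabFrame, which my version above doesn't do:

```javascript
// Hypothetical helper: wraps a promise-returning task so that a new run is
// skipped while the previous one has not settled yet.
function makeNonOverlapping(task) {
  let inFlight = false;
  const release = () => { inFlight = false; };
  return function run() {
    if (inFlight) return false; // previous run still pending, skip this tick
    inFlight = true;
    let pending;
    try {
      pending = task();
    } catch (err) {
      release();
      throw err;
    }
    // Allow the next run once the task settles, whether it worked or not.
    Promise.resolve(pending).then(release, err => {
      release();
      console.error('task failed:', err);
    });
    return true;
  };
}

// Possible use in setup(), assuming getFrame returns its promise:
// setInterval(makeNonOverlapping(getFrame), 300);
```

Whether this gets rid of the console errors I saw at 200 ms is a guess, since I don't know for sure that overlapping calls caused them.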

But the result is kinda cool...

If you want to try these demos yourself, be sure you're using Canary with the proper flags enabled (or living in the future), and hit:

https://cfjedimaster.github.io/webdemos/camera_new_hotness/test1.html (first demo)

https://cfjedimaster.github.io/webdemos/camera_new_hotness/test2.html (just the camera)

https://cfjedimaster.github.io/webdemos/camera_new_hotness/test3.html (live video)

You can find the source code here: https://github.com/cfjedimaster/webdemos/tree/master/camera_new_hotness